I came across an article in a Sunday newspaper a couple of weeks ago about an artist called xxxy who has created an installation using a BCI of sorts. I’m piecing this together from what I read in the paper and what I could see on his site, but the general idea is this: a person wears a portable EEG rig (I don’t recognise the model) and is placed in a harness with wires reaching up and up and up into the ceiling. The person closes their eyes and relaxes; presumably, as they enter a state of alpha augmentation, they begin to levitate courtesy of the wires. The more they relax, or the longer they sustain that state, the higher they go. It’s hard to tell from the video, but the person seems to be suspended around 25-30 feet in the air.
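I don't know how the installation actually converts EEG into height, but the behaviour described (relax more, or for longer, and you rise higher) can be sketched as a simple integrator. This is a minimal sketch under stated assumptions: the input is a hypothetical "relaxation index" between 0 and 1 (for instance, relative alpha power) sampled once per second, and the threshold, climb and descent rates, and the 9 m (about 30 ft) ceiling are invented values, not the artist's.

```python
# Hypothetical levitation controller: a relaxation index (0..1, e.g. relative
# alpha power) arrives once per second and moves the harness up or down.
MAX_HEIGHT_M = 9.0      # assumed ceiling, roughly 30 ft
THRESHOLD = 0.6         # assumed index level that counts as "relaxed"
CLIMB_M_PER_S = 0.25    # assumed maximum climb rate
DESCEND_M_PER_S = 0.5   # assumed descent rate when relaxation lapses


def update_height(height: float, relaxation: float, dt: float = 1.0) -> float:
    """Return the harness height after one time step of dt seconds."""
    if relaxation >= THRESHOLD:
        # Deeper relaxation climbs faster; sustaining it keeps climbing.
        depth = (relaxation - THRESHOLD) / (1.0 - THRESHOLD)
        height += CLIMB_M_PER_S * (0.5 + 0.5 * depth) * dt
    else:
        height -= DESCEND_M_PER_S * dt
    # Never below the floor, never above the ceiling.
    return max(0.0, min(MAX_HEIGHT_M, height))


def simulate(indices: list[float]) -> list[float]:
    """Run the controller over a sequence of per-second relaxation indices."""
    height = 0.0
    trace = []
    for r in indices:
        height = update_height(height, r)
        trace.append(height)
    return trace
```

For example, `simulate([0.7, 0.9, 1.0, 0.3])` rises for three seconds and drops once the index falls below the threshold. Integrating over time is what makes "the longer you sustain it, the higher you go" fall out naturally.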


Emotiv EPOC and the triple dilemma of early adoption

The UK version of Wired magazine ran an article in last month’s edition (no online version available) about Emotiv and the development of the EPOC headset. Much of the article focused on the human side of the story; the writer mixed biographical details of the company founders with an account of how the ideas driving the development of the headset came together. I’ve written about Emotiv before here on a specific technical issue. I still haven’t had any direct experience of the system, but I’d like to write about the EPOC again because it’s emerging as the headset of choice for early adopters.

In this article, I’d like to discuss a number of dilemmas faced by both the company and its customers. These issues aren’t specific to Emotiv; they hold for other companies in the process of selling/developing hardware for physiological computing systems.

Functional vocabulary: an issue for Emotiv and Brain-Computer Interfaces

The Emotiv system is an EEG headset designed for the development of brain-computer interfaces. It uses 12 dry electrodes (i.e. no gel necessary), communicates wirelessly with a PC and comes with a range of development software to create applications and interfaces. If you watch this 10-minute video from TEDGlobal, you get a good overview of how the system works.

First of all, I haven’t had any hands-on experience with the Emotiv headset, and these observations are based upon what I’ve seen and read online. But the talk at TED prompted a number of technical questions that I’ve been unable to answer in the absence of working directly with the system.


The Extended Nervous System

I’d like to begin the new year on a philosophical note. A lot of research in physiological computing is concerned with the practicalities of developing this technology. But what about the conceptual implications of using these systems (assuming that they are constructed and reach the marketplace)? At a fundamental level, physiological computing represents an extension of the human nervous system. This is nothing new. Our history is littered with tools and artifacts, from the plough to the internet, designed to extend the ‘reach’ of human senses and capabilities. As our technology becomes more compact, we become increasingly reliant on tools to augment our cognitive capacity. This can be as trivial as using the address book on a mobile phone as a shortcut to “remembering” a friend’s number or having an electronic reminder of an imminent appointment. This kind of “scaffolded thinking” (Clark, 2004) represents a merger between a human limitation (long-term memory) and a technological solution; we’ve effectively subcontracted part of our internal cognitive store to an external silicon one. Andy Clark argues persuasively in his book that these human-machine mergers are a perfectly natural consequence of human-technology co-evolution.

If we use technology to extend the human nervous system, does this also represent a natural consequence of the evolutionary trajectory that we share with machines? It is one thing to delegate information storage to a machine but granting access to the central nervous system, including the inner sanctum of the brain, represents a much more intimate category of human-machine merger.

In the case of muscle interfaces, where EMG activity or eye movements function as proxies for mouse or touchpad input, I feel the nervous system has been extended in a modest way – gestures are simply recorded at a different place; rather than looking and pointing, you can now just look. BCIs represent a more interesting case. Many are designed to completely circumvent the conventional motor component of input control. This makes BCIs brilliant candidates for assistive technology, and effective usage of a BCI device feels slightly magical because it is the ultimate in remote control. But, as with muscle interfaces, all we have done is create an alternative route for human-computer input. The exciting subtext to BCI use is how the user learns to self-regulate brain activity in order to successfully operate this category of technology. In my view, the volitional control of brain activity seems like an extension of the human nervous system (or, to be more specific, an extension of how we control the human nervous system), albeit one that occurs as a side effect or consequence of technology use.

Technologies based on biofeedback mechanisms, such as biocybernetic adaptation and ambulatory monitoring, literally extend the human nervous system by transforming a feeling/thought/experience that is private, vague and subjective into an observable representation that is public, quantified and objective. In addition, biocybernetic systems that monitor changes in physiology to trigger adaptive system responses take the concept further – these systems don’t merely represent the activity of the nervous system, they are capable of acting on the basis of this activity, completely bypassing human awareness if necessary. That prospect may alarm many, but one shouldn’t be too disturbed – the autonomic nervous system routinely does hundreds of things every minute just to keep us conscious and alert – without ever asking or intruding on consciousness. Of course, the process of autonomic control can go awry (panic attacks are one example), and it is telling that biofeedback represents one way to correct this instance of autonomic malfunction. The therapy works by making a hidden activity quantifiable and open to inspection, and in doing so, provides the means for the individual to “retrain” their own autonomic system via conscious control. This dynamic runs through those systems concerned with biocybernetic control and ambulatory monitoring. Changes at the user interface provide feedback on emotion or cognition and invite the user to extend self-awareness, and in doing so, to enhance control over their own central nervous systems. As N. Katherine Hayles puts it in her book on posthumanism: “When the body is integrated into a cybernetic circuit, modification of the circuit will necessarily modify consciousness as well. Connected to multiple feedback loops to the objects it designs, the mind is also an object of design.”

So, really what we’re talking about is extending our human nervous systems via technology and in doing so, enhancing our ability to self-regulate our human nervous systems. To slightly adapt a phrase from the autopoietic analysis of the nervous system, we are observing systems observing ourselves observing (ourselves).

It has been argued by Rosalind Picard among others that increased self-awareness and self-control of bodily states is a positive aspect of this kind of technology. In some cases, such as anger management and stress reduction, I can see clear arguments to support this position. On the other hand, I can also see potential for confusion and distress due to disembodiment (I don’t feel angry but the computer says I do – so which is me?) and invasion of privacy (I know you say you’re not angry but the computer says you are).

If we are to extend the nervous system, I believe we must also extend our conception of the self – beyond the boundaries of the skull and the skin – in order to incorporate feedback from a computer system into our strategies for self-regulation. But we should not let these devices draw us into simplistic conflicts. As N. Katherine Hayles points out, border crossings between humans and machines are achieved by analogy, not simple re-representation – the quantified self out there and the subjective self in here occupy different but overlapping spheres of experience. We must bear this in mind if we, as users of this technology, are to reconcile the plenitude of embodiment with the relative sparseness of biofeedback.

Categories of Physiological Computing

In my last post I articulated a concern about how the name adopted by this field may drive the research in one direction or another. I’ve adopted the Physiological Computing (PC) label because it covers the widest range of possible systems. Whilst the PC label is broad, generic and probably vague, it does cover a lot of different possibilities without getting into the tortured semantics of categories, sub-categories and sub-sub-categories.

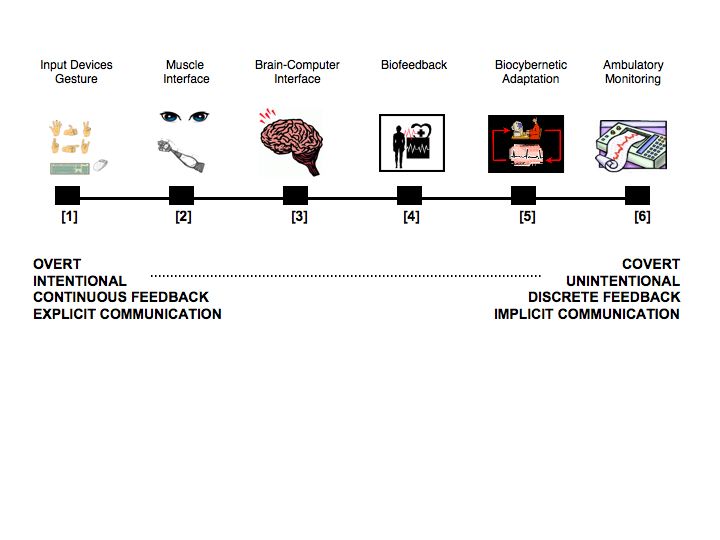

I’ve defined PC as a computer system that uses real-time bio-electrical activity as input data. At one level, moving a mouse (or a Wii) with your hand represents a form of physiological computing as do physical interfaces based on gestures – as both are ultimately based on muscle potentials. But that seems a little pedantic. In my view, the PC concept begins with Muscle Interfaces (e.g. eye movements) where the electrical activity of muscles is translated into gestures or movements in 2D space. Brain-Computer Interfaces (BCI) represent a second category where the electrical activity of the cortex is converted into input control. Biofeedback represents the ‘parent’ of this category of technology and was ultimately developed as a control device, to train the user how to manipulate the autonomic nervous system. By contrast, systems involving biocybernetic adaptation passively monitor spontaneous activity from the central nervous system and translate these signals into real-time software adaptation – most forms of affective computing fall into this category. Finally, we have the ‘black box’ category of ambulatory recording where physiological data are continuously recorded and reviewed at some later point in time by the user or medical personnel.

I’ve tried to capture these different categories in the diagram above. The differences between each grouping lie on a continuum from overt observable physical activity to covert changes in psychophysiology. Some are intended to function as explicit forms of intentional communication with continuous feedback; others are implicit, with little intentionality on the part of the user. Also, there is huge overlap between the five different categories of PC: most involve a component of biofeedback and all will eventually rely on ambulatory monitoring in order to function. What I’ve tried to do is sketch out the territory in the most inclusive way possible. This inclusive scheme also makes hybrid systems easier to imagine, e.g. BCI + biocybernetic adaptation, muscle interface + BCI – basically we have systems (2) and (3) designed as input control, either of which may be combined with (5) because it operates in a different way and at a different level of the HCI.
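One way to make the explicit/implicit distinction concrete is as a small data structure. This is my own reading of the scheme, not a transcription of the diagram: the explicit flags (biofeedback counted as explicit because the user deliberately self-regulates) and the `describe_hybrid` helper are hypothetical, and the diagram's (2)/(3)/(5) numbering is deliberately not encoded.

```python
from enum import Enum


class Category(Enum):
    # value = (label, explicit); "explicit" = intentional communication with
    # continuous feedback, otherwise implicit/passive. Flags are assumptions.
    MUSCLE_INTERFACE = ("muscle interface", True)
    BCI = ("brain-computer interface", True)
    BIOFEEDBACK = ("biofeedback", True)
    BIOCYBERNETIC_ADAPTATION = ("biocybernetic adaptation", False)
    AMBULATORY_MONITORING = ("ambulatory monitoring", False)

    def __init__(self, label: str, explicit: bool) -> None:
        self.label = label
        self.explicit = explicit


def describe_hybrid(a: Category, b: Category) -> str:
    """Hypothetical helper: say how two categories would share the interface."""
    if a is b:
        raise ValueError("a hybrid needs two different categories")
    if a.explicit and b.explicit:
        return f"two input channels: {a.label} + {b.label}"
    if a.explicit or b.explicit:
        # One channel carries deliberate input; the other works implicitly.
        control, background = (a, b) if a.explicit else (b, a)
        return f"input control via {control.label}, plus {background.label} in the background"
    return f"implicit only: {a.label} + {b.label}"
```

The two hybrids named in the text then come out as different kinds of combination: muscle interface + BCI gives two input channels, while BCI + biocybernetic adaptation pairs deliberate input with implicit adaptation operating at another level of the interaction.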

As usual, all comments welcome.

Five Categories of Physiological Computing

What’s in a name?

I attended a workshop earlier this year entitled aBCI (affective Brain-Computer Interfaces) as part of the ACII conference in Amsterdam. In the evening we discussed what we should call this area of research on systems that use real-time psychophysiology as an input to a computing system. I’ve always called it ‘Physiological Computing’ but some thought this label was too vague and generic (which is a fair criticism). Others were in favour of something that involved BCI in the title – such as Thorsten Zander’s definitions of passive vs. active BCI.

As the debate went on, it seemed that what we were discussing was an exercise in ‘branding’ as opposed to literal definition. There’s nothing wrong with that; it’s important that nascent areas of investigation represent themselves in a way that is attractive to potential sponsors. However, I have three main objections to the BCI label as an umbrella term for this research: (1) BCI research is identified with EEG measures, (2) BCI remains a highly specialised domain with the vast majority of research conducted on clinical groups and (3) BCI is associated with the use of psychophysiology as a substitute for input control devices. In other words, BCI isn’t sufficiently generic to cope with: autonomic measures, real-time adaptation, muscle interfaces, health monitoring etc.

My favoured term is vague and generic, but it is very inclusive. In my opinion, the primary obstacle facing the development of these systems is the fractured nature of the research area. Research on these systems is multidisciplinary, involving computer science, psychology and engineering. A number of different system concepts are out there, such as BCI and the concepts that have emerged from affective computing. Some are intended to function as alternative forms of input control; others are designed to detect discrete psychological states. Some use autonomic variables as opposed to EEG measures; others try to combine psychophysiology with overt changes in behaviour. This diversity makes the area fun to work in but also makes it difficult to pin down. At this early stage, there’s an awful lot going on, and I think we need a generic label, both to fully exploit synergies and, most importantly, to make sure nothing gets ruled out.

Brain, Body and Bytes Workshop: CHI 2010

Affective Comp/BCI workshop – deadline extension

The workshop on affective computing and BCI in Amsterdam this September has extended its deadline to 22nd June for all papers. Website for workshop here

Physiological Computing F.A.Q.

This post is out of date; please see the dedicated FAQ page for the latest revisions.

1. What is physiological computing?

Physiological Computing is a term used to describe any computing system that uses real-time physiological data as an input stream to control the user interface. A physiological computing system takes psychophysiological information from the user, such as heart rate or brain activity, and uses these data to make the software respond in real-time. The development of physiological computing is a multidisciplinary field of research involving contributions from psychology, neuroscience, engineering, & computer science.

2. How does physiological computing work?

Physiological computing systems collect physiological signals, analyse them in real-time and use this analysis as an input for computer control. This cycle of data collection, analysis and interpretation is encapsulated within a biocybernetic control loop.
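As a minimal sketch of that loop, each stage can be a pluggable function, with collection simulated by iterating over pre-recorded windows of samples. The blood-pressure rule used to exercise it (a mean systolic reading more than 10% above a hypothetical resting baseline is read as frustration, which triggers help) is an illustrative assumption, not a validated psychophysiological mapping.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Iterable


@dataclass
class BiocyberneticLoop:
    """Collection -> analysis -> interpretation -> response, one window at a time."""
    analyse: Callable[[list[float]], float]   # raw samples -> summary feature
    interpret: Callable[[float], str]         # feature -> psychological state label
    respond: Callable[[str], str]             # state label -> system action

    def step(self, window: list[float]) -> str:
        feature = self.analyse(window)
        state = self.interpret(feature)
        return self.respond(state)

    def run(self, windows: Iterable[list[float]]) -> list[str]:
        # Collection is simulated here by iterating over pre-recorded windows.
        return [self.step(w) for w in windows]


RESTING_SYSTOLIC_MMHG = 120.0  # hypothetical per-user baseline

frustration_loop = BiocyberneticLoop(
    analyse=mean,
    interpret=lambda bp: "frustrated" if bp > 1.1 * RESTING_SYSTOLIC_MMHG else "calm",
    respond=lambda state: "offer help" if state == "frustrated" else "no change",
)
```

Keeping the stages separate means the same loop can host an eye-movement, EEG or blood-pressure pipeline by swapping the three functions, which mirrors the point that the same cycle appears across very different applications.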

This loop describes how eye movements may be captured and translated into up/down and left/right commands for cursor control. The same flow of information can be used to represent how changes in electrocortical activity (EEG) of the brain can be used to control the movement of an avatar in a virtual world or to activate/deactivate system automation. With respect to an affective computing application, a change in physiological activity, such as increased blood pressure, may indicate higher levels of frustration and the system may respond with help information. The same cycle of collection-analysis-translation-response is apparent. Alternatively, physiological data may be logged and simply represented to the user or a medical professional; this kind of ambulatory monitoring doesn’t involve human-computer communication but is concerned with the enhancement of human-human interaction.
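The eye-movement case can be sketched as a mapping from electro-oculogram (EOG) deflections to the four cursor commands. Everything here is assumed for illustration: the 50 µV threshold, the sign convention (positive horizontal = gaze right, positive vertical = gaze up) and the function name. Real electrode montages and calibration procedures differ.

```python
from typing import Optional

THRESHOLD_UV = 50.0  # hypothetical deflection threshold in microvolts


def eog_to_command(horizontal_uv: float, vertical_uv: float) -> Optional[str]:
    """Map one pair of EOG deflections (relative to rest) to a cursor command.

    Assumed sign convention: positive horizontal = gaze right,
    positive vertical = gaze up.
    """
    h, v = abs(horizontal_uv), abs(vertical_uv)
    if max(h, v) < THRESHOLD_UV:
        return None  # eyes near rest: issue no command
    # The dominant axis wins, so diagonal glances map to a single command.
    if h >= v:
        return "right" if horizontal_uv > 0 else "left"
    return "up" if vertical_uv > 0 else "down"
```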

3. Give me some examples.

Researchers became interested in physiological computing in the 1990s. A group based at NASA developed a system that measured user engagement (whether the person was paying attention or not) using the electrical activity of the brain. This measure was used to control an autopilot facility during simulated flight deck operation. If the person was paying attention, they were allowed to use the autopilot; if attention lapsed, the autopilot was switched off, thereby prompting the pilot to take manual control and re-engage with the task.
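The engagement measure usually associated with this NASA work is the EEG ratio β/(α + θ), i.e. beta power divided by the sum of alpha and theta power. This sketch applies it to the switching rule just described; the band powers and the baseline threshold are placeholders. A real system would compute band powers from filtered EEG over several seconds and smooth the index before switching modes.

```python
def engagement_index(theta: float, alpha: float, beta: float) -> float:
    """Engagement index beta / (alpha + theta) from EEG band powers."""
    return beta / (alpha + theta)


def flight_modes(band_powers: list[tuple[float, float, float]], baseline: float) -> list[str]:
    """Apply the switching rule to successive (theta, alpha, beta) windows:
    engaged -> autopilot available; lapse -> autopilot off, manual control."""
    modes = []
    for theta, alpha, beta in band_powers:
        engaged = engagement_index(theta, alpha, beta) >= baseline
        modes.append("autopilot" if engaged else "manual")
    return modes
```

Note the direction of the rule: a lapse in engagement takes automation away, which is what pushes the pilot back into the task.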

Physiological computing was also used by the MIT Media Lab during its investigations into affective computing. These researchers were interested in how psychophysiological data could represent the emotional status of the user and enable the computer to respond to user emotion, for example by offering help if the user was irritated by the system.

Physiological computing has been applied to a range of software applications and technologies, such as: robotics (making robots aware of the psychological status of their human co-workers), telemedicine (using physiological data to diagnose both health and psychological state), computer-based learning (monitoring the attention and emotions of the student) and computer games.

4. Is the Wii an example of physiological computing?

In a way. The Wii monitors movement and translates that movement into a control input in the same way as a mouse. Physiological computing, as defined here, is quite different. First of all, these systems focus on hidden psychological states rather than obvious physical movements. Secondly, the user doesn’t have to move or do anything to provide input to a physiological computing system. What physiological computing does is monitor “hidden” aspects of behaviour.

5. How is physiological computing different from Brain-Computer Interfaces?

Brain-Computer Interfaces (BCI) are a category of system where the user self-regulates their physiology in order to provide input control to a computer system. For example, a user may self-regulate activity in the EEG (electroencephalogram – electrical activity of the brain) in order to move a cursor on the computer screen. Effectively, BCIs offer an alternative to conventional input devices, such as the keyboard or mouse, which is particularly useful for people with disabilities.
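A common way to turn self-regulated EEG into cursor movement is to make cursor velocity proportional to how far a band-power measure (e.g. the sensorimotor mu rhythm) deviates from a resting baseline. This one-dimensional sketch assumes band powers have already been computed upstream; the gain, time step and screen limit are arbitrary values chosen for illustration.

```python
def track_cursor(band_powers: list[float], baseline: float, gain: float = 10.0,
                 dt: float = 0.1, limit: float = 100.0) -> list[float]:
    """1-D cursor driven by self-regulated band power.

    Velocity is proportional to the deviation of band power from a resting
    baseline: raising power moves the cursor up, lowering it moves it down.
    """
    y = 0.0
    path = []
    for power in band_powers:
        velocity = gain * (power - baseline)
        y = max(-limit, min(limit, y + velocity * dt))  # keep cursor on screen
        path.append(y)
    return path
```

The training period mentioned below exists largely because the user has to learn to push this deviation reliably in either direction.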

There is some overlap between physiological computing and BCIs, but also some important differences. The physiological computing approach has been compared to “wiretapping” in the sense that it monitors changes in user psychology without requiring the user to take explicit action. Use of a BCI is associated with intentional control and requires a period of training prior to use.

6. OK. But the way you describe physiological computing sounds like a Biofeedback system….

There is some crossover between the approach used by physiological computing and biofeedback therapies. But like BCI, biofeedback is designed to help people self-regulate their physiological activity, e.g. to reduce the rate of breathing for those who suffer from panic attacks. There is some evidence that exposing a person to a physiological computing system may prompt improved self-regulation of physiology, simply because changes at the interface of a physiological computer may be meaningful to the user, e.g. if the computer does this, it means I’m stressed and need to relax.

The use of computer games to enhance biofeedback training represents the type of system that brings both physiological computing and biofeedback together. For example, systems have been developed to treat Attention-Deficit Hyperactivity Disorder (ADHD) where children are trained to control brain activity by playing a computer game – see this link for more info.
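Many neurofeedback protocols for ADHD reward a reduction in the ratio of theta to beta power. I can't say whether the systems referred to here use exactly that protocol, so treat this as a hypothetical sketch: the game character moves only while the theta/beta ratio sits below a target, and moves faster the further below it the ratio falls. The target and speed scale are invented.

```python
def theta_beta_ratio(theta: float, beta: float) -> float:
    """Ratio of theta-band to beta-band EEG power."""
    return theta / beta


def character_speed(theta: float, beta: float, target: float, max_speed: float = 1.0) -> float:
    """Reward a lowered theta/beta ratio: speed is 0 at or above the target
    and rises linearly to max_speed as the ratio falls towards 0."""
    ratio = theta_beta_ratio(theta, beta)
    if ratio >= target:
        return 0.0
    return max_speed * (target - ratio) / target
```

The game itself is just the feedback display; the training effect comes from the child discovering which mental state keeps the character moving.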

7. Can I buy a physiological computer?

You can buy systems that use psychophysiology for human-computer interaction. For example, a number of headsets developed by Emotiv and NeuroSky are on the market as an alternative to a keyboard or mouse. At the moment, commercial systems fall mainly into the BCI application domain. There are also a number of biofeedback games that fall into the category of physiological computing, such as The Wild Divine.

8. What do you need in order to create a physiological computer?

In terms of hardware, you need psychophysiological sensors (such as a GSR sensor, heart rate monitoring apparatus or EEG electrodes) that are connected to an analogue-to-digital converter. These digital signals can then be streamed to a computer, e.g. via Ethernet. On the software side, you need an API or equivalent to access the signals, and you’ll need to develop software that converts incoming physiological signals into a variable that can be used as a potential control input to an existing software package, such as a game. Of course, none of this is straightforward because you need to understand something about psychophysiological associations (i.e. how changes in physiology can be interpreted in psychological terms) in order to make your system work.
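The software step (turning an incoming signal into a usable control variable) might look something like this minimal sketch. It assumes samples have already been read through the device's API, which is hardware-specific and not shown. `ControlVariable`, the resting calibration period, the smoothing factor and the ±2 SD mapping onto 0..1 are all hypothetical choices.

```python
from statistics import mean, pstdev
from typing import Sequence


class ControlVariable:
    """Turn a raw physiological stream (e.g. skin conductance) into a 0..1
    value that a game or other application can read each frame."""

    def __init__(self, calibration: Sequence[float], smoothing: float = 0.2) -> None:
        if not 0.0 < smoothing <= 1.0:
            raise ValueError("smoothing must be in (0, 1]")
        # Resting samples recorded at the start define this user's baseline.
        self.mu = mean(calibration)
        self.sigma = pstdev(calibration) or 1.0  # avoid dividing by zero
        self.smoothing = smoothing
        self.smoothed = self.mu

    def update(self, sample: float) -> float:
        # Exponential smoothing suppresses sample-to-sample noise.
        self.smoothed += self.smoothing * (sample - self.smoothed)
        z = (self.smoothed - self.mu) / self.sigma
        # Map z-scores of -2..+2 onto 0..1 and clip the extremes.
        return max(0.0, min(1.0, (z + 2.0) / 4.0))
```

Normalising against each user's own baseline is one simple way to cope with large individual differences in resting physiology; it says nothing yet about what the value means psychologically, which is the harder problem raised above.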

9. Is it like anything I have experienced?

That’s hard to say because there isn’t very much apparatus like this generally available. If you’ve ever worn ECG sensors in either a clinical or sporting setting, you’ll know what it’s like to see your physiological activity “mirrored” in this way. That’s one aspect. The closest equivalent is biofeedback, where physiological data is represented as a visual display or a sound in real-time, but biofeedback is relatively specialised and used mainly to treat clinical problems.

10. A lot of the technology involved sounds ‘medical’. Is this something hospitals would use?

The sensor technology is widely used by medical professionals to diagnose physiological problems and to monitor physiological activity. Physiological computing represents an attempt to bring this technology to a more mainstream population by using the same monitoring technology to improve human-computer interaction. In order to do this, it’s important to move the sensor technology from the static systems where the person is tethered by wires (as used by hospitals) to mobile, lightweight sensor apparatus that people can wear comfortably and unhindered as they work and play.

11. Who is working on this stuff?

Physiological computing is inherently multidisciplinary. The business of deciding which signals to use and how they represent the psychological state of the user is the domain of psychophysiology (i.e. inferring psychological significance from physiological signals). Real-time data analysis falls into the area of signal processing that can involve professionals with backgrounds in computing, mathematics and engineering. Designing wearable sensor apparatus capable of delivering good signals outside of the lab or clinical environment is of interest to people working in engineering and telemedicine. Deciding how to use psychophysiological signals to drive real-time adaptation is the domain of computer scientists, particularly those interested in human-computer interaction and human factors.

12. What can a physiological computer allow me to do that is new?

Physiological computing has the potential to offer a new scenario for how we communicate with computers. At the moment, human-computer communication is asymmetrical with respect to information exchange. Your computer can tell you lots of things about itself, such as memory usage, download speed etc. But the computer is essentially in the dark about the person on the other side of the interaction. That’s why, when the computer tries to ‘second-guess’ the next thing you want to do, it normally gets it wrong, e.g. the Microsoft paperclip. By allowing the computer to access a representation of the user state, we open up the possibility of symmetrical human-computer interaction, where ‘smart’ systems adapt themselves to user behaviour in a way that’s both intuitive and timely. Therefore, in theory at least, we get help from the computer when we really need it. If the computer game is boring, the software knows to make the game more challenging. More than this, by making the computer aware of our internal state, we allow software to personalise its performance to that person with a greater degree of accuracy.
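The "boring game gets harder" idea is a form of dynamic difficulty adjustment. In this hypothetical sketch, engagement is a 0..1 estimate produced from physiology by some upstream model (not shown), and the thresholds and level range are invented. Lowering difficulty when engagement is very high, treating it as possible overload, is my own assumption rather than something stated in the FAQ.

```python
def adjust_difficulty(level: int, engagement: float, low: float = 0.3, high: float = 0.8,
                      min_level: int = 1, max_level: int = 10) -> int:
    """Nudge game difficulty one step based on a 0..1 engagement estimate.

    Low engagement (boredom) raises difficulty; very high engagement
    (assumed here to signal overload) lowers it; otherwise no change.
    """
    if engagement < low:
        level += 1
    elif engagement > high:
        level -= 1
    return max(min_level, min(max_level, level))
```

Moving one step at a time, with a dead band between the thresholds, keeps the game from lurching between levels on every noisy reading.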

13. Will these systems be able to read my mind?

Psychophysiological measures can provide an indication of a person’s emotional status. For instance, they can measure whether you are alert or tired or whether you are relaxed or tense. There is some evidence that they can distinguish between positive and negative mood states. The same measures can also capture whether a person is mentally engaged with a task or not. Whether this counts as ‘reading your mind’ or not depends on your definition. The system would not be able to diagnose whether you were thinking about making a grilled cheese sandwich or a salad for lunch.

14. What about the privacy of my data?

Good question. Physiological computing inevitably involves a sustained period of monitoring the user. This information is, by definition, highly sensitive. An intruder could monitor the ebb and flow of user mood over a period of time. If the intruder could access software activity as well as physiology, he or she could determine whether this web site or document elicited a certain reaction from the user or not. Most of us regard our unexpressed emotional responses as personal and private information. In addition, data collected via physiological computing could potentially be used to indicate medical conditions such as high blood pressure or heart arrhythmia. Privacy and data protection are huge issues for this kind of technology. It is important that the user exercises ultimate control with respect to: (1) what is being measured, (2) where it is being stored, and (3) who has access to that information.
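The three points of user control can be written down as an explicit policy object that the system checks before recording or releasing anything. This is a hypothetical sketch, not a standard or an existing API; the signal names, storage labels and reader names are invented.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ConsentPolicy:
    """User-set answers to: what is measured, where is it stored, who may see it."""
    measured: frozenset[str]                 # e.g. {"heart_rate"}
    storage: str                             # e.g. "local-device", or "none"
    readers: frozenset[str] = field(default_factory=frozenset)

    def may_record(self, signal: str) -> bool:
        return signal in self.measured

    def may_read(self, reader: str, signal: str) -> bool:
        # Nothing can be read back if it was never measured or never stored.
        if self.storage == "none" or not self.may_record(signal):
            return False
        return reader in self.readers
```

Making the policy immutable means any change to what is measured, stored or shared has to be an explicit new decision by the user rather than a silent edit.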

15. Where can I find out more?

There are a number of written and online sources regarding physiological computing. Almost all have been written for an academic audience. Here are a number of review articles:

Allanson, J. (2002). Electrophysiologically interactive computer systems. IEEE Computer, March 2002.

Fairclough, S. H. (2009). Fundamentals of physiological computing. Interacting with Computers, 21, 133-145.

Gilleade, K. M., Dix, A., & Allanson, J. (2005). Affective videogames and modes of affective gaming: Assist me, challenge me, emote me. Proceedings of DiGRA 2005.

Picard, R. W., & Klein, J. (2002). Computers that recognise and respond to user emotion: Theoretical and practical implications. Interacting with Computers, 14, 141-169.

Workshop on Affective Brain-Computer Interfaces

Just received notification of a 1-day workshop on Affective Computing/BCI to be held in September 2009 in Amsterdam. Deadline for papers: 15th June. Full details here