There have been a lot of tweets and blog posts devoted to an article written recently by Don Norman for the MIT Technology Review on wearable computing. The original article is here, but in summary, Norman points to an underlying paradox surrounding Google Glass etc. In the first instance, these technological artifacts are designed to enhance human abilities (allowing us to email on the move, navigate etc.). However, because of inherent limitations on the human information processing system, they have significant potential to degrade aspects of human performance. Think about browsing Amazon on your glasses whilst crossing a busy street and you get the idea.

The paragraph in Norman’s article that caught my attention and is most relevant to this blog is this one.

“Eventually we will be able to eavesdrop on both our own internal states and those of others. Tiny sensors and clever software will infer their emotional and mental states and our own. Worse, the inferences will often be wrong: a person’s pulse rate just went up, or their skin conductance just changed; there are many factors that could cause such things to happen, but technologists are apt to focus upon a simple, single interpretation.”

Speaking as someone who has written about physiological computing for almost ten years, I don’t recognise this conceptualisation of the technology. Of course it is possible to do all those things, but I don’t understand why we would replace our inherent human capacity to assess the emotional states of others (which is sensitive, works with minimal demands on attention and is generally quite accurate for most of us) with a technological solution. In the same article, Norman says that he is a person who believes in the power of artefacts to enhance human abilities, but the kind of functionality described is redundant because it duplicates inherent human abilities.

I have a couple of other issues with this part of the article. Norman talks about us “eavesdropping” on our internal states, which is something we do as part of everyday consciousness. And of course the psychophysiological inference is complicated, but to say it is often wrong is an oversimplification in my view.

This part of the article seemed lazy and reactionary in my opinion, but it did stimulate some questions about what physiological computing is meant to do. What is the raison d’être of this technology? How will it enhance human capability and potential?

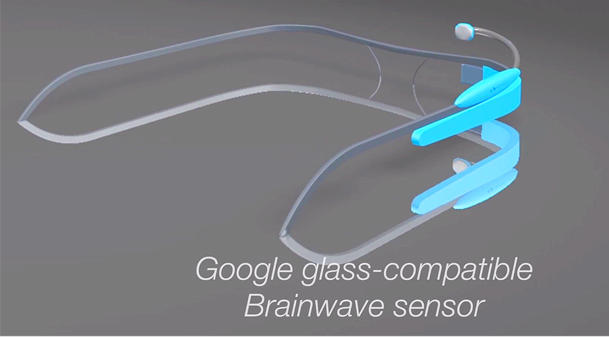

Let us consider brain-computer interfaces as our first example – in the case of able-bodied users, BCI provide a means to increase the bandwidth of communication, particularly if the person is involved in a task that is hands-busy or eyes-busy. BCI also provide variety in terms of the different ways in which we can communicate with a computer and permit the creation of novel forms of human-computer interaction. Both factors contribute to the popularity of BCI with the gaming community.

For affective computing and biocybernetic adaptation, the goal of physiological computing is to enable intelligent software adaptation. In this case, we are using technological artifacts (sensors) in order to enhance the capability of the machine rather than the person. But it is easy to imagine how some technologies, such as auto-tutoring systems, can pass the benefit of this enhancement on to the users. Those physiological computing devices designed to monitor and feed back data (such as our bodyblogging project and similar concepts from the Quantified Self) are designed to make the invisible visible – to quantify changes in behaviour and physiology in order to enhance self-regulation.

The existence of technology is effectively a justification for the creation of BCI, affective computing and biocybernetic adaptation, as the ultimate purpose of these categories is to enhance and enrich human-computer interaction. For monitoring technologies, the ultimate goal is to enhance human choices and decision-making. None of these instances is redundant in the way that Norman’s example was, but redundancy is a constant threat to this category of technology, which can act as an alternate source of information to our human intuition, insight and self-monitoring.

All of which brings us to some bigger questions about the ultimate purpose of technology and the end-game of human-machine co-evolution. If anyone is interested in those questions, I’d recommend Kevin Kelly’s book ‘What Technology Wants’ for a sustained rumination on that theme. I’d also strongly recommend the writings of Peter Hancock on this topic, particularly his book ‘Mind, Machine and Morality’. One of Hancock’s early articles on this theme, ‘Teleology for Technology’, which I read back in the late nineties (you can download a pdf here), made a big impression back then and continues to reverberate today, especially as I am working with emergent technologies. One of the themes of Hancock’s writing in this paper and others is a broad conception of human factors and ergonomics that supplements the standard enquiry of ‘can we do this?’ with the less-frequently asked question of ‘should we do this?’

Like Hancock, I feel that people working in HCI and human factors are best placed to pose and answer both questions because it is our role to represent the user, and not just the users of today but also the users of tomorrow.