In my last post I articulated a concern about how the name adopted by this field may drive the research in one direction or another. I’ve adopted the Physiological Computing (PC) label because it covers the widest range of possible systems. Whilst the PC label is broad, generic and probably vague, it does cover a lot of different possibilities without getting into the tortured semantics of categories, sub-categories and sub-sub-categories.

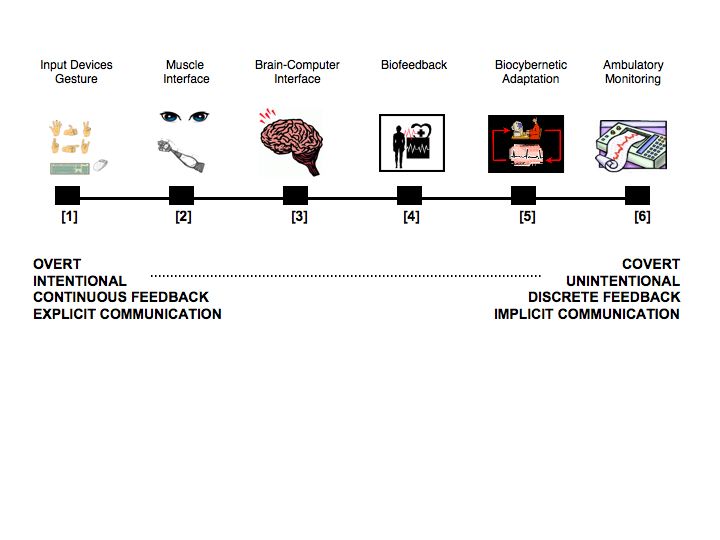

I’ve defined PC as a computer system that uses real-time bio-electrical activity as input data. At one level, moving a mouse (or a Wii) with your hand represents a form of physiological computing as do physical interfaces based on gestures – as both are ultimately based on muscle potentials. But that seems a little pedantic. In my view, the PC concept begins with Muscle Interfaces (e.g. eye movements) where the electrical activity of muscles is translated into gestures or movements in 2D space. Brain-Computer Interfaces (BCI) represent a second category where the electrical activity of the cortex is converted into input control. Biofeedback represents the ‘parent’ of this category of technology and was ultimately developed as a control device, to train the user how to manipulate the autonomic nervous system. By contrast, systems involving biocybernetic adaptation passively monitor spontaneous activity from the central nervous system and translate these signals into real-time software adaptation – most forms of affective computing fall into this category. Finally, we have the ‘black box’ category of ambulatory recording where physiological data are continuously recorded and reviewed at some later point in time by the user or medical personnel.

I’ve tried to capture these different categories in the diagram below. The differences between each grouping lie on a continuum from overt observable physical activity to covert changes in psychophysiology. Some are intended to function as explicit forms of intentional communication with continuous feedback, others are implicit with little intentionality on the part of the user. Also, there is huge overlap between the five different categories of PC: most involve a component of biofeedback and all will eventually rely on ambulatory monitoring in order to function. What I’ve tried to do is sketch out the territory in the most inclusive way possible. This inclusive scheme also makes hybrid systems easier to imagine, e.g. BCI + biocybernetic adaptation, muscle interface + BCI – basically we have systems (2) and (3) designed as input control, either of which may be combined with (5) because it operates in a different way and at a different level of the HCI.

As usual, all comments welcome.

Five Categories of Physiological Computing

Dear Steve,

I have followed your blog for a while, but this is my first comment.

With regard to the name physiological computing (previous post), I agree with you that this name is correct as long as it is a broad category that groups together others (like physiological emotion assessment and, even more specifically, emotion assessment from brain signals).

With regard to the proposed sub-categories, I also like them, but I will critique a bit the way you present them on this scale.

First, I disagree with the continuous/discrete feedback distinction: I do not see why biocybernetic adaptation or ambulatory signals are discrete. I understand that, for instance, ambulatory systems give alerts only at some moments in time, but in my view this remains continuous since an alert can occur at any moment (one can also say that the system continuously outputs “no problem”).

Second, I wonder if this scale is really continuous (i.e. is it possible to say, for instance, that a muscle interface has “more continuous feedback” than a biofeedback system, or that communicating with gestures is more explicit than with muscles?). In my view those factors are more like distinct properties of a given system. For instance, a BCI can be unintentional with continuous feedback.

Third, it would be interesting to define the difference between the overt, intentional and explicit factors, which in my view are the same. Coming back to the continuous scale discussion, I believe that a system is designed to fall into one of those categories (say, intentional or not) but it sometimes happens that the communication does not follow the expected design (it becomes unintentional, as when a BCI command is wrongly detected). Another example is an unintentional communication (like affective feedback) that becomes intentional (imagine a person able to control his/her physiological signals and fake an emotion). In this sense, yes, I agree that there might be some kind of continuous scale representing an “in-between system”. But this is a side effect of the system rather than its design objective.

Anyway, thanks for this blog, this nomenclature and this interesting discussion.

Hi

Thanks for your comment and feedback.

I don’t fundamentally disagree with the points you make about the weakness of the continuous/discrete dichotomy. The concept I was trying to convey was how some systems which are used as input devices present high fidelity feedback. For example, moving a cursor with eye movements provides continuous feedback of the relationship between physiology and events at the interface. Other systems, such as ambulatory monitoring, do operate continuously from the perspective of the sensor, but from a user interface perspective, provide a lower fidelity of feedback as data may be blocked into minutes or hours and reviewed on a retrospective basis. The continuous/discrete dichotomy is meant to represent the time fidelity of feedback at the interface.

I agree that the overt/intentional/explicit categories seem to be saying the same thing and a good deal of overlap exists here. The key difference is between a system detecting a pattern of physiological activity that the user intends to produce vs. those wiretapping systems that normally fall into the category of affective computing where the physiological pattern occurs without intention on the part of the user. It seems to me that perhaps intentionality is the key distinction here. Your example of a user ‘faking’ an emotion for an affective computing system really brings that point home.

Thanks again for your comment.