[iframe width="400" height="300" src="http://player.vimeo.com/video/32915393"]

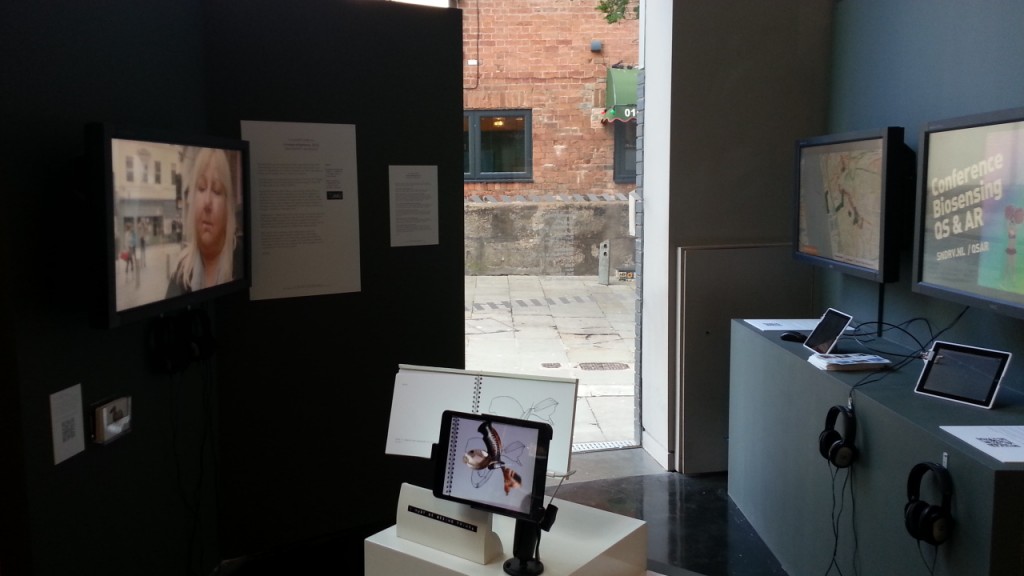

Last month I gave a presentation at the Annual Meeting of the Human Factors and Ergonomics Society, held at Leeds University in the UK. I stood on the podium and presented the work, but the people who deserve most of the credit are Marjolein van der Zwaag (from Philips Research Laboratories) and my own PhD student at LJMU, Elena Spiridon.

You can watch a podcast of the talk above. The work was originally conducted as part of the REFLECT project at the end of 2010 and was inspired by earlier research on affective computing, where the system makes an adaptation to alleviate a negative mood state. The rationale is that any such adaptation will have beneficial effects: by reducing the duration or intensity of the negative mood, it will mitigate any undesirable effects on the person’s behaviour or health.

Our study was concerned with the level of anger a person might experience on the road. We know that anger places a ‘load’ on the cardiovascular system and is associated with the undesirable behaviours of aggressive driving. In our study, we subjected participants to a simulated driving task that was designed to make them angry, using a protocol that we have developed at LJMU. Marjolein was interested in the effects of different types of music on the cardiovascular system while the person is experiencing a negative mood state; for our study, she created four categories of music that varied in terms of high/low activation and positive/negative valence.

The study does not represent an investigation into a physiological computing system per se, but is rather a validation study to explore whether an adaptation, such as selecting a certain type of music when a person is angry, can have beneficial effects. We’re working on a journal paper version at the moment.