I originally coined the term ‘physiological computing’ to describe a whole class of emerging technologies constructed around closed-loop control. These technologies collected implicit measures from the brain and body of the user, which informed a process of intelligent adaptation at the user interface.

If you survey research in this field, from mental workload monitoring to applications in affective computing, there’s an overwhelming bias towards the first part of the closed loop – the business of designing sensors, collecting data and classifying psychological states. In contrast, you see very little on what happens at the interface once target states have been detected. The dearth of work on intelligent adaptation is a problem because signal processing protocols and machine learning algorithms are being developed in a vacuum – without any context for usage. This disconnect both neglects and negates the holistic nature of closed-loop control and the direct link between classification and adaptation. We can even generate a maxim to describe the relationship between the two:

the number of states recognised by a physiological computing system should be the minimum required to support the range of adaptive options that can be delivered at the interface

This maxim minimises the number of states to enhance classification accuracy, while making an explicit link between the act of measurement at the first part of the loop and the process of adaptation that is the last link in the chain.

If this kind of stuff sounds abstract or of limited relevance to the research community, it shouldn’t. If we look at research into the classic ‘active’ BCI paradigm, there is clear continuity between state classification and corresponding actions at the interface. This continuity owes its prominence to the fact that the BCI research community is dedicated to enhancing the lives of end users and the utility of the system lies at the core of their research process. But to be fair, the link between brain activation and input control is direct and easy to conceptualise in the ‘active’ BCI paradigm. For those systems that work on an implicit basis, detection of the target state is merely the jumping-off point for a complicated process of user interface design.

One method for understanding adaptation within physiological computing systems is to look to the natural sciences for basic concepts and definitions. According to biological science, adaptation has three distinct but related interpretations. The first refers to a dynamic process by which an entity adapts to the environment. The same word also describes the resulting state of evolutionary fitness achieved through that process. A third definition refers to a characteristic of the entity that is shaped by a process of natural selection. For biologists, adaptation is simultaneously an evolving process, a temporary condition and a stable trait.

If we apply this metaphor to closed-loop technologies, we characterise the technology as an adaptive entity and the user as the environment. The technology, like a biological organism, comes equipped with a finite repertoire of adaptive possibilities, whereas the user is complex, changeable and able to encompass contradictory characteristics, just like a living ecosystem.

Through the prism of this metaphor, intelligent adaptation is a dynamic process of adjustment through repeated interaction with the user. The system tries to ‘fit’ its response to stay in alignment with the goals of the user. A technological system can only achieve a degree of fit by receiving feedback from the user when an adaptation occurs at the interface. This feedback could be as simple as a manual response, for example, a ‘cancel’ button when the user does not like or approve of the adaptive response. This is where the importance of a repertoire of adaptive responses comes into play. If the user has the option of disapproving of option x, then the system must have options y and z to present as alternatives. These options function as heritable traits in this Darwinian metaphor and those adaptive responses that meet the approval of the user are more likely to occur than others that prompted the user to hit the ‘cancel’ button. As an aside, you can see this process in action via neuroadaptive technology where implicit changes in neurophysiology are used to inform a process of reinforcement learning that favours desirable responses from a repertoire.
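To make the feedback loop above a little more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the class name `AdaptiveRepertoire`, the multiplicative weight update, and the `learning_rate` and `floor` parameters are all hypothetical choices of mine, not a description of any existing system. A ‘cancel’ shrinks the weight of an option, an accepted adaptation grows it, and options are sampled in proportion to their weights.

```python
import random


class AdaptiveRepertoire:
    """Hypothetical sketch: a finite repertoire of adaptive responses whose
    selection weights are shaped by user approval or cancellation."""

    def __init__(self, options, learning_rate=0.5, floor=0.05, seed=None):
        # Every option starts with the same weight (no prior preference).
        self.weights = {option: 1.0 for option in options}
        self.learning_rate = learning_rate
        # The floor stops a cancelled option from vanishing entirely,
        # so it can recover if the user's preferences change later.
        self.floor = floor
        self._rng = random.Random(seed)

    def choose(self):
        """Sample one option with probability proportional to its weight."""
        options = list(self.weights)
        weights = [self.weights[o] for o in options]
        return self._rng.choices(options, weights=weights, k=1)[0]

    def feedback(self, option, cancelled):
        """Shrink the weight after a 'cancel', grow it after acceptance."""
        if cancelled:
            factor = 1.0 - self.learning_rate
        else:
            factor = 1.0 + self.learning_rate
        self.weights[option] = max(self.floor, self.weights[option] * factor)

    def probabilities(self):
        """Return the current selection probability of each option."""
        total = sum(self.weights.values())
        return {o: w / total for o, w in self.weights.items()}


repertoire = AdaptiveRepertoire(["x", "y", "z"], seed=0)
repertoire.feedback("x", cancelled=True)   # user hit 'cancel' on option x
repertoire.feedback("y", cancelled=False)  # user let option y stand
next_option = repertoire.choose()          # y is now the most likely pick
```

In a neuroadaptive version of this loop, the `cancelled` flag would be inferred from an implicit signal rather than a button press, but the selection logic could stay the same.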

This generative process of pruning the adaptive repertoire is shaped by task-related factors and the preferences of the individual, both of which are likely to change over time. For example, as the user spends more time on the task, their level of skill improves and adaptation a, which worked fine when they were a novice, becomes intrusive and annoying – hence the system must recognise this change of skill level and move to adaptation b designed for expert users. The process of intelligent adaptation should be sensitive to both inter-individual differences, e.g. Bob likes option x whereas Mike prefers option y, and the kind of intra-individual changes that are characteristic of the transition from novice to expert.

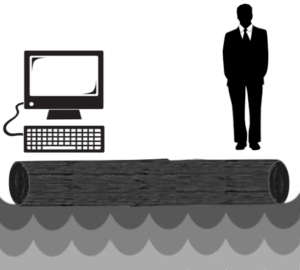

There are a couple of metaphors that describe implicit modes of human-computer interaction. When talking about system automation, people have spoken of the ‘H-metaphor’ wherein the relationship between rider and horse describes how an operator remains in the loop during autonomous operations. In the case of closed-loop control and intelligent adaptation, the user is not directing the interaction in an explicit way, but rather responding to events at the interface. My preferred metaphor for the generative and fluctuating relationship between person and computer is the lumberjack log roll. We have two people, or in this case a computer and a person, attempting to locate a stable equilibrium by responding reactively to the actions of the other. This metaphor also captures both the technical challenge and potential pitfalls of designing technology that implicitly synchronises with user goals. To put it bluntly, if you ever watch a lumberjack log roll, the only sure thing is that at some point one or both of the players are going to get wet – and our process of intelligent adaptation must be sufficiently robust to recover from this worst-case scenario.

At the end of this process, the designer can identify what worked and what did not, or to put it more formally, which adaptive options were used frequently and repeatedly and which fell rapidly into disuse. This survival analysis of the design process is shaped by implicit interaction and can feed back into the range of adaptive options that are provided in the repertoire of future systems. The same data can also indicate whether certain options are preferred by specific types of users, e.g. expert vs. novice.
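As a rough illustration of that retrospective step, the sketch below tallies how often each option was accepted, broken down by user type. The log format of `(user_type, option, accepted)` tuples and the function name `acceptance_rates` are assumptions made for the example. It is a simple per-group acceptance rate, not a formal survival analysis.

```python
from collections import defaultdict


def acceptance_rates(log):
    """Return the fraction of presentations that were accepted for each
    (user_type, option) pair in a hypothetical interaction log."""
    shown = defaultdict(int)
    accepted = defaultdict(int)
    for user_type, option, was_accepted in log:
        key = (user_type, option)
        shown[key] += 1
        if was_accepted:
            accepted[key] += 1
    # Only pairs that were shown at least once appear in the result.
    return {key: accepted[key] / shown[key] for key in shown}


# Made-up log entries purely for illustration.
log = [
    ("novice", "a", True),
    ("novice", "a", True),
    ("expert", "a", False),
    ("expert", "b", True),
]
rates = acceptance_rates(log)
```

Options with persistently low rates for a given user type are candidates for removal from that group's repertoire in a future design.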

My point is that intelligent adaptation is a generic and generative process that goes beyond the specifics of how we design the interface of an adaptive game or auto-tutor or robot. It also requires our urgent attention if we’re to design closed-loop systems in a way where the ends are truly integrated with the means.