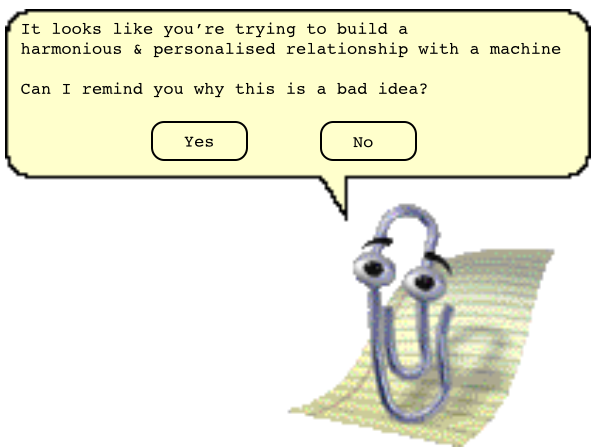

Everyone who used MS Office between 1997 and 2003 remembers Clippy. He was a help avatar designed to interact with the user in a way that was both personable and predictive: a friendly sales assistant combined with a butler who anticipated all your needs. At least, that was the idea. In reality, Clippy fell well short of those expectations. He was probably the most loathed feature of those versions of Office; he even featured in this Time Magazine list of the world’s worst inventions, a list that also includes Agent Orange and the Segway.

In an ideal world, Clippy would have responded to user behaviour in ways that were intuitive, timely and helpful. In reality, his functionality was limited, his appearance often intrusive and his intuition was way off. Clippy irritated so completely that his legacy lives on over ten years later. If you describe the concept of an intelligent adaptive interface to most people, half of them recall the dreadful experience of Clippy and the rest will probably be thinking about HAL from 2001: A Space Odyssey. With those kinds of role models, it’s not difficult to understand why users are in no great hurry to embrace intelligent adaptation at the interface.

In the years since Clippy passed, the debate around machine intelligence has placed greater emphasis on the improvisational spark that is fundamental to displays of human intellect. This recent article in MIT Technology Review makes the point that a “conversation” with Eugene Goostman (the chatterbot who won a Turing Test competition at Bletchley Park in 2012) lacks the natural “back and forth” of human-human communication. Modern expectations of machine intelligence go beyond a simple imitation game within highly structured rules; users are looking for a level of spontaneity and nuance that resonates with their human sense of what other people are.

But one of the biggest problems with Clippy was not simply his intrusiveness but the fact that his repertoire of responses was very constrained: he could ask if you were writing a letter (remember those?) and precious little else.

The previous post described how technology can monitor the context of the user in order to produce intelligent adaptation at the interface. The first problem concerns making an accurate assessment of user state or behaviour. It is easy to see how Clippy might have been improved by a rudimentary awareness of human behaviour that went beyond input at the keyboard. In hindsight, Clippy faced the enormous handicap of making predictions about human intention on the basis of an asymmetrical mode of human-computer interaction. This phrase describes the typical scenario where a user works in a state of complete awareness about the computer's capacities and functions, whereas the machine works in the dark with zero knowledge of the user's behaviour, moods or intentions (see this paper for a full explanation).

Let us imagine that Clippy could “read” the user correctly based on some multimodal combination of overt behaviour and covert psychophysiology as described in the last post. Would he really have been transformed into a useful, or at least a less annoying, interface feature? With respect to the latter, if Clippy had been part of a symmetrical human-computer interaction (where both user and system are equally well-informed about the status of each other), he could at least have worked out when he was being intrusive and irritating, and perhaps quietly withdrawn from the interface.

The capacity of a machine to assess the effects of its own actions on the user represents a category of reflexive monitoring. This ability could be tremendously useful in providing feedback from the user to an adaptive system with respect to what does and what does not work for that particular individual. This 2011 paper gives a detailed description of the concept. Armed with direct user feedback, even Clippy could have adjusted his behaviour, not just to minimise any irritation but also to personalise his interventions for a specific individual.

This cycle of adaptation-feedback-adjustment is a logical engine for personalised computing and intelligent adaptation. The availability of human data allows the system to take action at the interface, e.g. to offer help or ask whether the user is writing a letter, and each action implicitly probes the preferences of the individual via their spontaneous response to it.
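To make the cycle concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `PreferenceModel` class, the action names, the learning rate and the frustration readings (a number between 0 and 1) are all hypothetical, and a real system would need a far richer model of the user. The point is only that the user's response to an action can be fed back to change what the system does next.

```python
from dataclasses import dataclass, field


@dataclass
class PreferenceModel:
    """Running estimate of how well each interface action works for one user."""
    learning_rate: float = 0.5  # hypothetical value, chosen for illustration
    scores: dict = field(default_factory=dict)

    def choose(self, actions):
        # Adaptation: pick the best-scoring action; untried actions score 0.0.
        return max(actions, key=lambda a: self.scores.get(a, 0.0))

    def update(self, action, before, after):
        # Feedback: the benefit is the drop in estimated frustration.
        benefit = before - after
        old = self.scores.get(action, 0.0)
        # Adjustment: move the stored score part of the way towards the benefit.
        self.scores[action] = old + self.learning_rate * (benefit - old)


model = PreferenceModel()
actions = ["offer_help", "stay_quiet"]  # hypothetical action names

# Hypothetical readings: offering help raised frustration from 0.4 to 0.7.
first = model.choose(actions)
model.update(first, before=0.4, after=0.7)

# The negative feedback lowers the score for that action,
# so the next choice avoids it.
second = model.choose(actions)
print(first, "->", second)
```

The design choice worth noting is that the system never asks the user what they prefer; the preference is inferred from the change in state that follows each action.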

Research on adaptive systems, especially in the area of affective computing, places enormous emphasis on the capacity of the machine to monitor and make accurate inferences about the psychological state of the user. As important as this is, it is only half of the story. Remember, the big problem with Clippy was his impoverished repertoire of responses; he was adaptive with a small ‘a’. If we intend physiological computing to enable a new generation of systems that can personalise and harmonise with the user, we need to build software with multiple options built in, where the system has n different ways of doing the same thing. Constructing a system that can detect a range of psychological states is pointless if the adaptive repertoire of the machine is unable to respond to those psychological states in an intelligible fashion.

Imagine this scenario: the user is writing a long document and gets ‘stuck’ on the composition of a particular paragraph. The system detects an increase in frustration and invokes Clippy to politely suggest that the user take a rest break. This response leaves the user positively seething, so the system detects an even greater rise in frustration, quickly pulls Clippy (he tends to have that effect) and starts to discreetly play relaxing music. Some users may be pacified by this response, but others will not be. In the case of the latter, the system must dig deeper into its bag of tricks, perhaps releasing the scent of lavender into the room or activating a coffee maker at the back of the room to nudge the person away from the screen for a few minutes. This is a relatively simple scenario, but note how many adaptive options are necessary for the machine to demonstrate a modicum of personalisation and intelligence.
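The scenario can be sketched as a system working through its repertoire until something helps. This is an illustrative sketch only: the function `run_repertoire`, the response names, the threshold of 0.5 and the `simulated_user` with its made-up frustration values are all assumptions, standing in for real sensing and real actuators.

```python
def run_repertoire(repertoire, apply_response, threshold=0.5):
    """Try responses in order until estimated frustration falls below threshold.

    Returns the responses that were tried and whether the user was settled.
    """
    tried = []
    for response in repertoire:
        tried.append(response)
        frustration = apply_response(response)
        if frustration < threshold:
            return tried, True
    # The repertoire ran out before anything worked.
    return tried, False


# Hypothetical repertoire, ordered from least to most elaborate.
REPERTOIRE = ["suggest_break", "play_music", "release_lavender", "start_coffee_maker"]


def simulated_user(response):
    # Made-up frustration readings (0 to 1) after each response for one user.
    effects = {"suggest_break": 0.9, "play_music": 0.7, "release_lavender": 0.3}
    return effects.get(response, 0.8)


tried, settled = run_repertoire(REPERTOIRE, simulated_user)
print(tried, settled)

# With a Clippy-sized repertoire, the same user is never settled.
clippy_tried, clippy_settled = run_repertoire(["suggest_break"], simulated_user)
print(clippy_tried, clippy_settled)
```

For this simulated user the full repertoire succeeds on its third option, whereas the single-option version fails however accurately frustration is detected.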

The process of linking the detection of user states to the adaptive repertoire of the machine remains the great unspoken and unexplored topic of research in physiological computing. Enhancing the capacity of technology to ‘read’ the psychological state of the user without similarly upgrading the adaptive capacity of software at the interface is an exercise in futility. It is analogous to restoring the ability to hear whilst simultaneously removing the capacity for speech. We must augment the ability of machines to speak as well as to ‘listen’ to or ‘read’ the user.

For monitoring the user to have any real meaning within the context of human-computer interaction, it must be tied to what the computer can do.